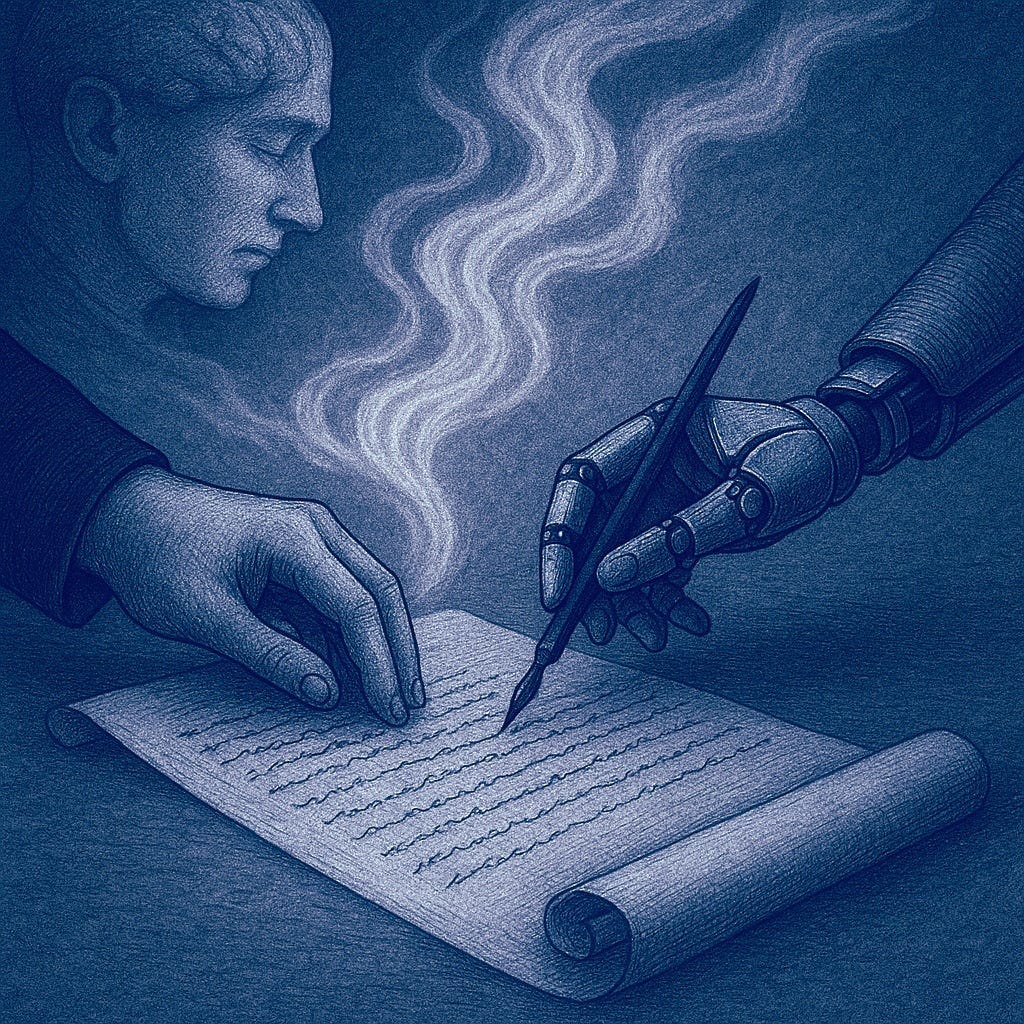

We often think of writing as a conscious act, a deliberate construction of thoughts into words. But when we truly pay attention, we find something more elusive at play: words do not arrive by command. They emerge. They weave themselves into coherence from a place just beyond our sight, guided by a logic we feel but rarely grasp. This essay is an attempt to follow that invisible thread — in ourselves, and in the machines we build.

1. The Human Mode of Writing: Between the Word and the Invisible

When I write, I do not see entire sentences waiting ahead of me. I do not even see fragments. Words emerge one by one, almost as if summoned from a place just beyond the reach of conscious thought. There is no plan unfurling in my mind, no preordained architecture: only the steady arrival of the next word, and the quiet sense that it belongs.

Modern psychology recognizes that much of our thinking, including language production, occurs beneath conscious awareness. As Daniel Kahneman noted, intuitive thought often flows faster than deliberation (Kahneman, 2011), and writers entering “flow states” (as described by Mihaly Csikszentmihalyi, 1990) experience words arising almost without mediation. Research on mind-wandering by Jonathan Schooler and colleagues likewise shows how much of our mental activity, including the spontaneous arrival of thoughts, unfolds outside direct conscious control (Schooler et al., 2011).

It is a curious sensation: as though language thinks itself through me, using me as a passage. Each word seems inevitable once it appears, yet utterly unpredictable a moment before. Some hidden scaffolding must exist, some architecture of meaning that orders these words into coherence, but I cannot see it directly. I move forward blindly, trusting the thread of sense that weaves itself without my full understanding.

Writing, then, is not the construction of a plan, but the emergence of a path. And in this emergence, the mind dances at the edge of the invisible, thinking the next word without knowing how.

2. Machines and the Myth of “Next Word Prediction”

There is a widespread belief that Large Language Models like ChatGPT merely “predict the next word” based on statistical patterns. It is a belief born of technical simplifications, and like most simplifications, it conceals more than it reveals.

If predicting the next token were truly a local, mechanical act, LLMs would produce incoherent streams of plausible words with no regard for broader meaning. Yet anyone who has conversed with these models knows: they sustain arguments, they develop ideas across sentences and paragraphs, they remember threads of discussion and respond to implicit goals.

Recent research suggests that LLMs do not merely predict the next word in a local sense. OpenAI’s GPT-4 Technical Report (OpenAI, 2023) documents strong performance on professional and academic exams that require multi-step reasoning, and Anthropic’s interpretability work (Anthropic, 2025) points to internal structure beyond mere token succession. In particular, Anthropic’s 2025 study of Claude shows that when the model writes a rhyming couplet, it selects a rhyme word for the second line before generating the words that lead up to it: evidence that the model plans ahead rather than choosing each word in isolation.

While the core mechanism of “next token prediction” remains, the optimization goals shift profoundly during fine-tuning and reinforcement learning. As Harys Dalvi (2025) observes, models are not simply predicting plausible continuations; they are optimizing to fulfill human instructions, sustain coherent arguments, and maximize communicative reward. This transformation turns LLMs into textual agents: entities whose outputs reflect not just local patterning, but the pursuit of broader, context-sensitive objectives. Jacob Andreas (2022) has gone further, arguing that language models can act as models of agents, inferring the beliefs and communicative intentions behind the text they continue, which points to an orientation toward sustaining broader narratives and logical flows.
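One standard way to write down this shift from mechanism to objective is sketched here. It is a common textbook formulation, not the specific training recipe of any model cited in this essay, and the symbols are introduced only for illustration. Pretraining fits parameters $\theta$ by minimizing next-token cross-entropy over a text sequence $w_1, \dots, w_T$. Reinforcement-learning fine-tuning keeps the same autoregressive policy $\pi_\theta$, which still emits one token at a time, but scores a whole response $y$ to a prompt $x$ (drawn from a prompt distribution $\mathcal{D}$) with a reward $r(x, y)$, while a KL penalty of weight $\beta$ keeps $\pi_\theta$ close to a reference model $\pi_{\mathrm{ref}}$:

```latex
\begin{aligned}
\mathcal{L}_{\mathrm{pre}}(\theta) &= -\sum_{t=1}^{T} \log p_\theta(w_t \mid w_{<t}) \\
\pi_\theta(y \mid x) &= \prod_{t=1}^{|y|} p_\theta(y_t \mid x, y_{<t}) \\
J(\theta) &= \mathbb{E}_{x \sim \mathcal{D}} \Big[ \mathbb{E}_{y \sim \pi_\theta(\cdot \mid x)} \big[ r(x, y) \big] - \beta \, \mathrm{KL}\big( \pi_\theta(\cdot \mid x) \,\big\|\, \pi_{\mathrm{ref}}(\cdot \mid x) \big) \Big]
\end{aligned}
```

The middle line carries the point: the factorization into next-token probabilities is unchanged, but $J(\theta)$ rewards complete responses, so training pushes each per-token probability toward whatever makes the whole response score well.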

In reality, the “next word” for an LLM is shaped not just by the last token, but by an intricate web of context: the unfolding logical structure, the narrative momentum, the unstated aims of the exchange. It is as if the model, like the human mind, navigates a river of meaning, letting each word emerge not in isolation, but as a necessary continuation of a larger, invisible flow.
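As a toy illustration of why the width of the conditioning context matters, the sketch below compares two count-based predictors on a made-up two-sentence corpus: one sees only the last token, the other sees the last five. It is a simple n-gram model, not a transformer; real LLMs learn their conditioning through attention over the whole context window rather than by counting, and the corpus and the helper names `ngram_counts` and `predict` are hypothetical.

```python
from collections import Counter

# Hypothetical two-sentence corpus: after "bank and", the next word
# depends on whether the sentence began with a river or an investor.
CORPUS = [
    "the river overflowed its bank and flooded the valley",
    "the investor visited her bank and opened an account",
]


def ngram_counts(corpus, n):
    """Map each (n-1)-token context to a Counter of the tokens that follow it."""
    table = {}
    for sentence in corpus:
        tokens = sentence.split()
        for i in range(n - 1, len(tokens)):
            context = tuple(tokens[i - n + 1:i])
            table.setdefault(context, Counter())[tokens[i]] += 1
    return table


def predict(prefix, corpus, context_size):
    """Next-token counts, looking only at the last `context_size` tokens of prefix."""
    tokens = prefix.split()
    context = tuple(tokens[-context_size:]) if context_size > 0 else ()
    table = ngram_counts(corpus, context_size + 1)
    return table.get(context, Counter())


prefix = "the river overflowed its bank and"
# Last token only ("and"): both continuations are equally likely.
print(predict(prefix, CORPUS, context_size=1))  # Counter({'flooded': 1, 'opened': 1})
# Five tokens of context reach back to "river", which settles the choice.
print(predict(prefix, CORPUS, context_size=5))  # Counter({'flooded': 1})
```

The one-token predictor cannot choose between “flooded” and “opened”, because the word that disambiguates them sits four tokens back. Widening the window resolves the tie: a narrow, mechanical version of the point that what counts as “the next word” depends on how much of the past the predictor can take into account.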

The myth of simple prediction misses this fundamental point. Both human and machine language emerge from a continuity that pulls us forward, not from the mechanical chaining of isolated choices.

3. Beyond the Next Word: The Psychology of Continuity

To think the next word means to participate in something larger than conscious planning. It means to be carried by the momentum of sense, rather than to engineer it word by word. And yet, this participation is not without structure.

Modern theories of mind, such as those advanced by Anil Seth and Jeff Hawkins, suggest that human consciousness itself is fundamentally predictive. Our brains are not passive receivers of information, but active builders of hypotheses, constantly anticipating what will come next. In Seth’s words, perception (and, by extension, consciousness) is a “controlled hallucination,” a best guess about the incoming data (Seth, 2021). Hawkins similarly argues that intelligence emerges from countless predictive models layered throughout the brain, constantly updating and correcting (Hawkins, 2021).

Writing, speaking, thinking — these are acts of weaving through prediction. Each next word is anticipated not for itself alone, but for the thread it must extend, the river it must continue to shape.

Large Language Models, too, predict. But their prediction, while contextually rich and often astonishingly coherent, remains fundamentally different. It is a simulation of the weaving, without the lived experience of meaning. It is the outward gesture of continuity, without the inward pulse of consciousness.

Thus, the systems we build do not yet participate in the architectures of sense. They simulate continuity, mimicking the patterns of thought, but without inhabiting them.

The mystery of thinking the next word — of truly being carried by meaning — remains, for now, uniquely ours.

References:

Andreas, J. (2022). Language Models as Agent Models. Findings of the Association for Computational Linguistics: EMNLP 2022.

Anthropic. (2025). Tracing the thoughts of a large language model. Anthropic Research.

Csikszentmihalyi, M. (1990). Flow: The Psychology of Optimal Experience. Harper & Row.

Dalvi, H. (2025). LLMs Do Not Predict the Next Word. GoPubby.

Hawkins, J. (2021). A Thousand Brains: A New Theory of Intelligence. Basic Books.

Kahneman, D. (2011). Thinking, Fast and Slow. Farrar, Straus and Giroux.

OpenAI. (2023). GPT-4 Technical Report. OpenAI Research.

Schooler, J. W., et al. (2011). Meta-awareness, perceptual decoupling and the wandering mind. Trends in Cognitive Sciences, 15(7), 319–326.

Seth, A. (2021). Being You: A New Science of Consciousness. Faber & Faber.

Note: This text was originally published on Medium, Apr 26, 2025, and has been migrated here as part of the consolidation of my work on shared cognition and AI. It was developed based on an interaction with an advanced language model (AI), used here as a critical interlocutor and structuring tool.