1. Introduction

In my previous piece, "Don’t Tell the AI What to Be — Show It," I argued that we shouldn’t tell AI what to be but should instead show it, allowing it to reveal something of ourselves through its responses. But this raises a deeper question: What kind of mirror is AI really? Is it a faithful reflection of our minds and emotions, or is it a funhouse mirror, bending and distorting our input in ways we might not even notice?

Humans have long used mirrors as metaphors for self-understanding. In a conversation, a good human listener doesn’t just repeat our words; they clarify, challenge, expand, and deepen our meaning. They reflect our emotions, intentions, and even our blind spots—creating a space where our own words come alive in new ways.

But when we talk to AI (e.g., ChatGPT), the nature of the mirror changes. It’s not a conscious being holding up a glass to our inner world; it’s a probabilistic language model, trained on massive amounts of human text. It reflects us, but only in certain ways, and with serious limitations.

Recent research by Shani, Jurafsky, LeCun, and Shwartz-Ziv (Shani et al., 2025) shows that large language models compress meaning into broad conceptual categories, prioritizing statistical efficiency over semantic nuance. Drawing on information-theoretic approaches like Rate-Distortion Theory and the Information Bottleneck, their study demonstrates how AI systems aggressively optimize for compressed representations, often at the cost of rich, fine-grained detail. This aligns with what I have elsewhere called the “pipeline of compressed meaning” (Zagalo, 2025a), in which complex, context-sensitive information is filtered and condensed before reaching the human mind. Yet, while Shani et al.’s work quantifies the trade-off between compression and meaning preservation, it leaves open the question of which dimensions of meaning are actually lost in the process, particularly those layers of reflection that shape human dialogue.
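
For readers who want the formal shape of this trade-off, the two frameworks are usually stated as follows. These are the standard textbook formulations, offered only as a sketch; the exact objective used by Shani et al. may differ. The symbols are notation assumed for this illustration: $X$ is the input, $\hat{X}$ or $T$ its compressed representation, $Y$ the information we want to preserve, $d$ a distortion measure, $D$ the tolerated distortion, $\beta$ a trade-off weight, and $I(\cdot\,;\cdot)$ mutual information.

$$
R(D) = \min_{p(\hat{x} \mid x)\,:\; \mathbb{E}[d(X, \hat{X})] \le D} I(X; \hat{X})
$$

$$
\min_{p(t \mid x)} \; I(X; T) - \beta\, I(T; Y)
$$

The first expression (Rate-Distortion) asks how little information about $X$ a representation can carry while keeping the average distortion below $D$. The second (Information Bottleneck) asks for a representation $T$ that keeps as little of $X$ as possible while still retaining information about $Y$. In both cases, pushing the rate down buys a cheaper representation at the price of lost detail, which is the sense of "compression" used here.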

In this piece, I want to unpack what I call the “dialogical mirror”: to examine the different layers through which humans naturally reflect each other in conversation, and how AI imitates, or fails to imitate, those layers.

2. The Human Dialogue Mirror

Drawing on Roman Jakobson’s (1960) six functions of language (referential, emotive, conative, phatic, metalingual, and poetic), I propose here a reframing of these communicative functions as dialogical dimensions of meaning.

When we think about what it means to “reflect” someone in a conversation, it’s easy to imagine a simple echo, parroting words back with no transformation. But in reality, human dialogue is a sophisticated, layered process. As Merleau-Ponty (1962) argued in Phenomenology of Perception, our interactions are embodied and intersubjective: we don’t merely repeat but co-construct meaning in real time. This co-construction unfolds across multiple dimensions, from the shaping of context to the negotiation of intention, the expression of emotion, and the fluidity of conversation.

In psychological terms, Carl Rogers (1951) described reflection as a core skill of active listening. This kind of reflection involves not just paraphrasing but also clarifying emotional undertones, acknowledging intentions, and integrating the other’s perspective. It’s a dynamic, collaborative process aligned with the pragmatic approach to human communication developed by Watzlawick et al. (1967), which highlights that every message is an act of meaning negotiation and relationship management.

Based on these traditions, I see the human dialogical mirror as the basis of human communication, which is why Jakobson's model of language functions is fundamental to understanding the full extent of the process. However, my aim here is not to map that extent, but rather to identify the essential points that support the creation of meaning in a mirror relationship. I have therefore constructed a reinterpretation of Jakobson's model to apply to the dialogical mirror.

2.1 Referential - Contextual Meaning

Human dialogue is always situated. Words are not simply carriers of dictionary meanings; they gain their force from the surrounding context — social, historical, and interpersonal. As Merleau-Ponty (1962) notes, meaning emerges from embodied and situated interactions, not from abstract symbols alone. Contextual Meaning encompasses the human capacity to adapt meaning dynamically, interpreting not only what is said but also what is implied, omitted, or emphasized.

2.2 Emotive - Expressive Aesthetics

Expressive Aesthetics highlights how the sender shapes the language creatively. It focuses on the speaker’s ability to play with style, rhythm, and tone to produce artistic or rhetorical effects. This dimension captures how dialogue can be infused with aesthetic qualities that go beyond the literal meaning of words, allowing the speaker to assert identity, creativity, and even irony. Goffman (1981) highlights how individuals perform identity through language, using expressive cues to project social roles, manage impressions, and create meaning in interaction.

2.3 Conative - Negotiated Understanding

Dialogue is an act of negotiation. We test each other’s ideas, challenge assumptions, and build shared frameworks. Habermas’s (1984) theory of communicative action underscores the role of rational discourse in coordinating meaning and validating knowledge claims. Negotiated Understanding is the dimension where dialogue transforms isolated viewpoints into shared perspectives. Jan Švankmajer’s animation Dimensions of Dialogue (1982) captures the frictional nature of this negotiation through a surrealist lens.

2.4 Phatic - Affective Resonance

Affective Resonance centres on how the receiver experiences the emotional impact of dialogue (Damasio, 1994). It focuses on the feelings, trust, empathy, and connections evoked by the speaker’s words and intonation. This dimension is crucial for understanding how dialogue builds relationships, establishes rapport, and creates emotional alignment between interlocutors.

2.5 Metalingual - Intentional Agency

Dialogue is action. It’s not only about exchanging information but also about influencing, persuading, requesting, or commanding. Austin’s (1962) speech act theory reminds us that every utterance has a force that shapes relationships. Intentional Agency is the dimension where humans align intentions, expectations, and outcomes in conversation.

2.6 Poetic - Relational Flow

Finally, dialogue unfolds over time. It has continuity, rhythm, and memory. Bruner (1990) emphasizes that narrative structure binds experiences into coherent stories, sustaining identity and meaning. Relational Flow captures the temporal dimension of dialogue, the way it builds, revisits, and transforms meaning across moments, interactions, and relationships.

In human dialogue, these layers interact seamlessly, creating a mirror that does more than reflect words; it helps us see ourselves, understand each other, and grow. It’s in this complex, multi-layered process that the true richness of human interaction lies.

3. The AI Mirror: What AI Can Do

If the human dialogical mirror is a multi-layered, dynamic process, how does AI, and specifically LLMs like ChatGPT, measure up? Despite its limitations, AI does capture certain dimensions of human dialogue remarkably well, largely thanks to the immense scale of its training data and its sophisticated linguistic architecture.

Contextual Meaning

At its core, a large language model is a semantic engine. By processing user input and analyzing patterns in large amounts of data, it excels at rephrasing, clarifying, and summarizing meaning. As Radford et al. (2019) observed, language models learn to predict the next word based on vast contexts, giving them an uncanny ability to paraphrase and echo user input. This strength allows AI to navigate broad contexts, though sometimes at the cost of nuanced, situated meaning.

Expressive Aesthetics

AI models are adept at capturing and reproducing the style, tone, and rhythm of a user’s language. This is sometimes called “style transfer” in the literature (Shen et al., 2017). By aligning with the user’s writing conventions—whether formal, poetic, or colloquial—the AI can foster a sense of rapport and continuity, echoing the aesthetic dimension of human dialogue.

These capabilities illustrate how AI can function as an amplifying dialogical mirror—a term that captures not just the remarkable fluency and precision with which language models reflect dimensions of human dialogue, but also their capacity to expand and enrich our inner worlds. By processing our words, identifying patterns, and weaving them back to us in new forms—often with surprising coherence and creativity—these systems reveal perspectives we had not yet considered, opening new avenues for reflection and self-discovery. This amplification of meaning is what often leaves users captivated by AI’s presence, as if encountering a consciousness that illuminates their thoughts in unexpected ways.

Yet, as we shall see in the next sections, there are significant dimensions of the human dialogical mirror that remain elusive to AI—reminding us of the essential differences between machine reflection and human understanding.

4. The AI Mirror: What AI Gives the Impression of Doing

While AI can simulate certain dialogical functions, these reflections lack genuine comprehension, intentionality, and the embodied experience that define human dialogue.

Negotiated Understanding

Through repeated exposure to human dialogue structures, AI models can adopt the frameworks and mental models present in the user’s input. Brown et al. (2020) noted that GPT-3 shows surprising abilities in few-shot learning: given a small number of examples, it can adopt new cognitive frames with impressive fluency. Yet, while AI can mimic frameworks, it does not genuinely comprehend or negotiate meaning with human-like intentionality.

Affective Resonance

While AI lacks genuine emotion, it can effectively mirror the emotional tone embedded in language. Buechel and Hahn (2018) showed that even simpler language models can detect and replicate sentiment with considerable accuracy. For users, this can feel like a form of empathy, even though, strictly speaking, it is statistical alignment rather than lived experience.

These illusions underscore the limitations explored in the next section.

5. The AI Mirror: Where AI Fails

There are crucial dimensions where AI’s mirror reveals its limitations. These blind spots remind us that AI is not a human interlocutor, but rather a probabilistic pattern generator, lacking consciousness, empathy, and ethical agency.

Moreover, as Shani et al. (2025) demonstrate, large language models struggle to represent the nuances of “typicality” (the way some examples are more central or prototypical within a category than others). This means that, even when AI captures broad semantic categories, it often fails to preserve the subtle gradations that make human concepts adaptable and context-sensitive.

Intentional Agency

AI struggles to grasp the deeper intentions and contextual nuances that shape human communication. Austin’s (1962) theory of speech acts reminds us that utterances are not mere strings of words but actions embedded in social contexts, carrying intentions that shape relationships and meaning. While AI can mimic the surface form of requests, commands, or questions, it does not genuinely “intend” to achieve outcomes, persuade, or negotiate shared goals. Its outputs are statistical predictions shaped by patterns in training data, not by any lived experience or social grounding. This means that, even when AI seems to “understand” our intentions, it is merely reconstructing probable linguistic responses, rather than actively engaging in a co-constructed, purpose-driven exchange. As Bender and Koller (2020) emphasize, large language models have no grounded understanding of the world, only statistical correlations, which prevents them from fully participating in the intentional, agentive dimensions of human dialogue.

Relational Flow

While language models can manage local coherence within a conversation—responding appropriately to immediate prompts and short-term references—persistent memory remains a challenge. Some systems, including recent versions of ChatGPT, now incorporate memory features that store information across sessions, allowing for customization and a sense of continuity. However, these architectures do not replicate the organic, experiential memory that humans use to build trust, empathy, and shared narratives. Instead, they provide an engineered sense of memory that smooths the flow of conversation but lacks the depth and richness of truly human relational dynamics. This temporal fragmentation ultimately limits AI’s ability to develop sustained, interactive, and meaningful dialogue.

These limitations are not mere technicalities; they reflect the fundamental difference between human and machine. AI’s mirror may reflect our words, but it cannot reflect our lived experience, our social context, or our moral agency. Understanding these gaps is essential if we are to use AI wisely, as a tool for exploration and reflection, not as a substitute for human dialogue.

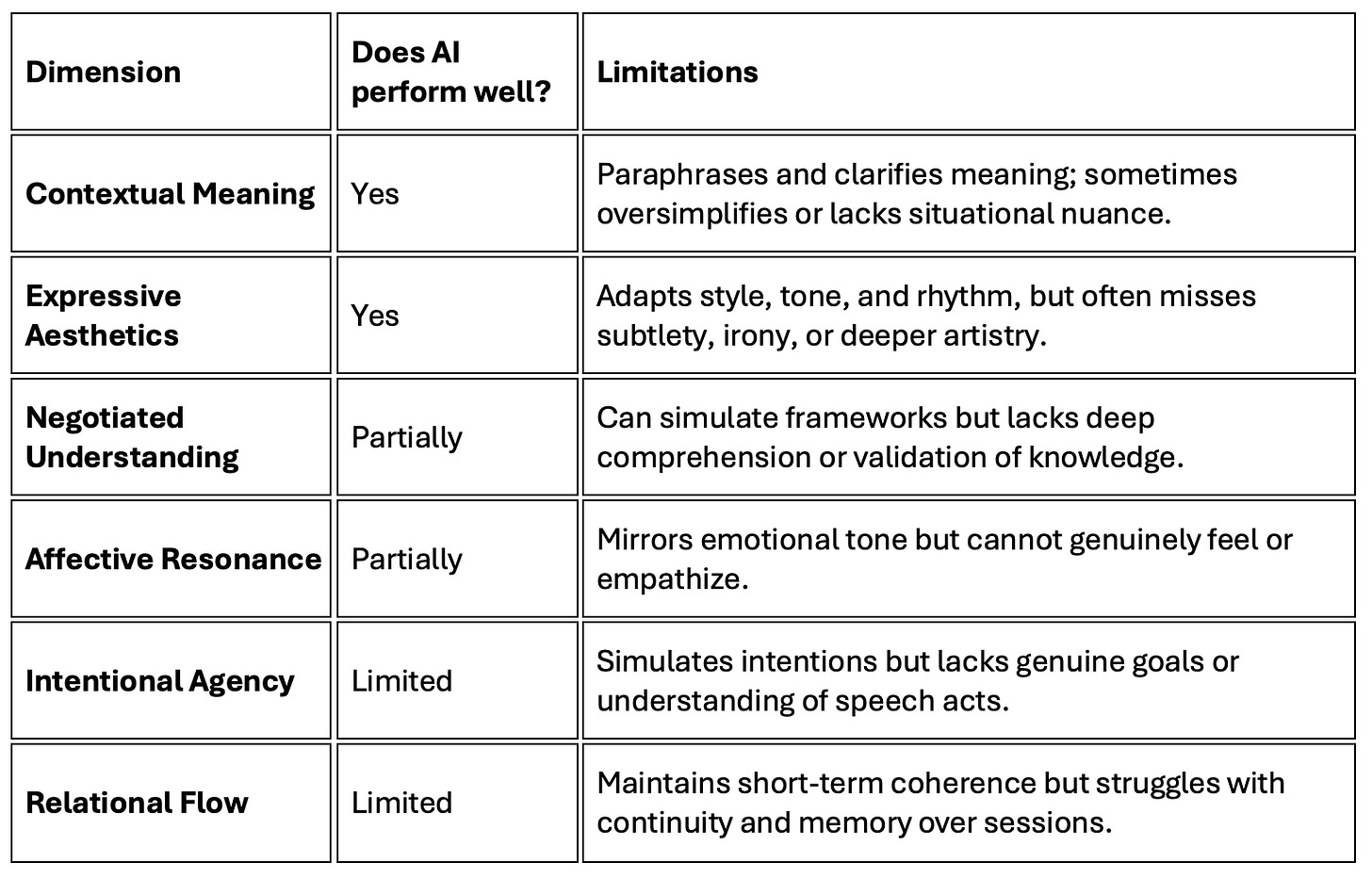

6. Summary Table

To make this analysis clearer, here’s a summary of how AI models perform across the dialogical layers outlined above. This table illustrates where AI excels, where it falls short, and what we, as human users, must remain aware of when interpreting its outputs:

7. Conclusion

The dialogical mirror, whether in human conversation or in AI interaction, offers a powerful metaphor for how we come to understand ourselves. In human dialogue, this mirror is multi-layered. Through its intricate interplay, we co-construct knowledge, identity, and empathy.

When we turn to AI, particularly large language models like ChatGPT, we encounter a different kind of mirror: one that can capture certain dimensions with astonishing fluency. These capabilities make AI an amplifying dialogical mirror, accelerating our own processes of reflection and inquiry.

Yet this mirror also reveals its fundamental limitations. It struggles with intentional agency, negotiated understanding, relational flow, and affective resonance. It cannot understand, feel, or reason—it predicts. Its reflections are probabilistic syntheses of language patterns, not acts of comprehension or empathy.

The clarity we find in AI’s mirror is ultimately our own: it emerges from the precision of our language and the intentionality of our prompts. AI sharpens what we bring, but it cannot reveal what we do not show. As Bender et al. (2021) remind us, large language models are “stochastic parrots,” skilled at rearranging what we provide but lacking a true understanding of what they reflect.

This invites us to embrace a deeper responsibility: to speak with intention, to question what we see reflected, and to recognize the boundaries of what AI can show us. For all its brilliance, the AI mirror remains a partial reflection—its depth contingent on the depth of what we bring.

References

Austin, J. L. (1962). How to Do Things with Words. Harvard University Press.

Bender, E. M., & Koller, A. (2020). Climbing towards NLU: On meaning, form, and understanding in the age of data. Proceedings of ACL, 5185–5198.

Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). On the dangers of stochastic parrots: Can language models be too big? Proceedings of FAccT 2021, 610–623.

Brown, T. B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D. M., Wu, J., Winter, C., ... Amodei, D. (2020). Language models are few-shot learners. arXiv. https://doi.org/10.48550/arXiv.2005.14165

Bruner, J. (1990). Acts of Meaning. Harvard University Press.

Buechel, S., & Hahn, U. (2018). Word emotion induction for multiple languages as a deep multi-task learning problem. Proceedings of ACL 2018, 1907–1918.

Damasio, A. (1994). Descartes’ Error: Emotion, Reason, and the Human Brain. Putnam.

Goffman, E. (1981). Forms of Talk. University of Pennsylvania Press.

Habermas, J. (1984). The Theory of Communicative Action: Reason and the Rationalization of Society. Beacon Press.

Jakobson, R. (1960). Closing statement: Linguistics and poetics. In T. A. Sebeok (Ed.), Style in Language (pp. 350–377). MIT Press.

Merleau-Ponty, M. (1962). Phenomenology of Perception. Routledge.

Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., & Sutskever, I. (2019). Language models are unsupervised multitask learners. OpenAI Technical Report.

Rogers, C. R. (1951). Client-Centered Therapy: Its Current Practice, Implications and Theory. Houghton Mifflin.

Shani, C., Jurafsky, D., LeCun, Y., & Shwartz-Ziv, R. (2025). From tokens to thoughts: How LLMs and humans trade compression for meaning. arXiv preprint.

Shen, T., Lei, T., Barzilay, R., & Jaakkola, T. (2017). Style transfer from non-parallel text by cross-alignment. Advances in Neural Information Processing Systems, 30.

Švankmajer, J. (Director). (1982). Dimensions of Dialogue [Film]. Krátký film Praha.

Tomasello, M. (2008). Origins of Human Communication. MIT Press.

Watzlawick, P., Bavelas, J. B., & Jackson, D. D. (1967). Pragmatics of Human Communication: A Study of Interactional Patterns, Pathologies, and Paradoxes. Norton.

Wu, Z., et al. (2021). Recurrence is all you need: Efficient processing of long sequences with recurrent memory. arXiv preprint.

Zagalo, N. (2025a). How AI filters the world: The pipeline of compressed meaning. Mirrors of Thought. Retrieved from https://mirrorsofthought.substack.com/p/how-ai-filters-the-world-the-pipeline

Zagalo, N. (2025b). Don’t tell the AI what to be—show it. Mirrors of Thought. Retrieved from https://mirrorsofthought.substack.com/p/dont-tell-the-ai-what-to-be-show

Note: This essay was developed through an interaction with an advanced language model (AI), used as a critical interlocutor throughout the reflective process. The structure and writing were supported by the AI, under the direction and final review of the author.